Tutorial 04: Uncertainty and integration (solutions)

Finn Lindgren

Source:vignettes/Tutorial04Solutions.Rmd

Tutorial04Solutions.RmdIntroduction

In this lab session you will explore

- using RMarkdown to organise text and code

- maximum likelihood estimator sampling distributions and approximate confidence interval construction

- Laplace approximation and importance sampling for approximate Bayesian credible interval construction

- Clone your

lab04-*repository from https://github.com/StatComp21/ either on your own computer (new Project from version control) or to https://rstudio.cloud - If on rstudio.cloud, setup

GITHUB_PATcredentials, like before. - Upgrade/install the

StatCompLabpackage, see https://finnlindgren.github.io/StatCompLab/ - The repository has two files,

RMDemo.Rmdandmy_code.R. Make a copy ofRMDemo.Rmd, and call itLab4.Rmd - During this lab, modify the

Lab4.Rmddocument and add new code and text commentary for the lab to the document. (You can remove the demonstration parts of the file when you don’t need them anymore, and/or keep a separate copy of it.) When pressing the “knit” button, the RMarkdown file will be run in its own R environment, so you need to include any neededlibrary()calls in a code chnk in the file, normally an initial “setup” chunk.

Three alternatives for Poisson parameter confidence intervals

Consider the Poisson model for observations : that has joint probability mass function In the week 4 lecture, two parameterisations were considered. We now add a third option:

- , and

- , and

- , and

From the week 4 lecture, we know that the inverse expected Fisher information is for case 1 and for case 2. For case 3, show that the inverse expected Fisher information is .

Solution:

For case 3, the negated log-likelihood is with 1st order derivative which shows that , and 2nd order derivative which is equal to for all values, so the inverse of the expected Hessian is .

Interval construction

Use the approximation method for large from the lecture to construct approximate confidence intervals for using each of the three parameterisations. Define three functions, CI1, CI2, and CI3, each taking paramters

-

y: a vector of observed values -

alpha: the nominal error probability of the confidence intervals

To avoid having to specify alpha in a common case, you

can use alpha = 0.05 in the function argument definition to

set a default value.

The function pmax may be useful (see its help text).

Solution:

CI1 <- function(y, alpha = 0.05) {

n <- length(y)

lambda_hat <- mean(y)

theta_interval <-

lambda_hat - sqrt(lambda_hat / n) * qnorm(c(1 - alpha / 2, alpha / 2))

pmax(theta_interval, 0)

}

CI2 <- function(y, alpha = 0.05) {

n <- length(y)

theta_hat <- sqrt(mean(y))

theta_interval <-

theta_hat - 1 / sqrt(4 * n) * qnorm(c(1 - alpha / 2, alpha / 2))

pmax(theta_interval, 0)^2

}

CI3 <- function(y, alpha = 0.05) {

n <- length(y)

theta_hat <- log(mean(y))

theta_interval <-

theta_hat - 1 / sqrt(exp(theta_hat) * n) * qnorm(c(1 - alpha / 2, alpha / 2))

exp(theta_interval)

}You can use the following code to test your functions, storing each

interval as a row of a matrix with rbind (“bind” as “rows”,

see also cbind for combining columns):

## [1] 0 2 2 2 4

CI <- rbind(

"Method 1" = CI1(y),

"Method 2" = CI2(y),

"Method 3" = CI3(y)

)

colnames(CI) <- c("Lower", "Upper")We can print the result as a table in our RMarkdown by using a

separate codechunk, calling the knitr::kable function:

knitr::kable(CI)| Lower | Upper | |

|---|---|---|

| Method 1 | 0.7604099 | 3.239590 |

| Method 2 | 0.9524829 | 3.431663 |

| Method 3 | 1.0761094 | 3.717094 |

Will all three methods always produce a valid interval? Consider the

possible values of

.

Experiment with different values of n and

lambda in the simulation of y.

Solution:

When , the first method produces a single point as “interval”.

The third method fails if , due to log of zero.

This can happen when or are close to zero.

For each approximate confidence interval construction method, we might ask the question of whether it fulfils the definition of an actual confidence interval construction method; that for all (or at least for a relevant subset of the parameter space). In coursework project 1, you will investigate the accuracy of some approximate confidence interval construction methods.

Bayesian credible intervals

Assume a true value of , and simulate a sample of of size .

Solution:

n <- 5

lambda <- 10

y <- rpois(n, lambda)

y # Actual values will depend on if set.seed() was used at the beginning of the document## [1] 11 7 11 8 8Now consider a Bayesian version of the Poisson model, with prior model that has probability density function .

One can show that the exact posterior distribution for given is a distribution (using the shape&rate parameterisation), and credible intervals can be constructed from quantiles of this distribution.

In cases where the theoretical construction is impractical, an alternative is to instead construct samples from the posterior distribution, and extract empirical quantiles from this sample. Here, we will use importance sampling to achieve this.

Let , so that . Show that the prior probability density for is .

Gaussian approximation

Solution:

Alternative 1: From probability theory, we know that , so that

Alternative 2: First, we derive the CDF: , from the CDF for the Exponential distribution. Taking the derivative with respect to gives the density , which is the needed result.

The posterior density function for is with log-density and by taking derivatives we find the mode at , and negated Hessian at the mode.

With this information we can construct a Gaussian approximation to the posterior distribution, .

Importance sampling

Simulate a sample from this Gaussian approximation of the posterior distribution, for some large , with hyperparameter .

Solution:

We need to calculate unnormalised importance weights , , Due to lack of normalisation, these “raw” weights cannot be represented accurately in the computer. To get around that issue, first compute the logarithm of the weights, , and then new, equivalent unnormalised weights .

Solution:

log_weights <- (x * (1 + sum(y)) - (a + n) * exp(x)) -

dnorm(x, mean = log(1 + sum(y)) - log(a + n), sd = 1 / sqrt(1 + sum(y)), log = TRUE)

weights <- exp(log_weights - max(log_weights))Look at the help text for the function wquantile (in the

StatCompLab package, from version 0.4.0) that computes quantiles from a

weighted sample, and construct a 95% credible interval for

using the

sample and associate weights, and then transform it into a credible

interval for

Solution:

## [1] 1.873696 2.448506

lambda_interval <- exp(theta_interval)

lambda_interval## [1] 6.512323 11.571043Cumulative distribution function comparison

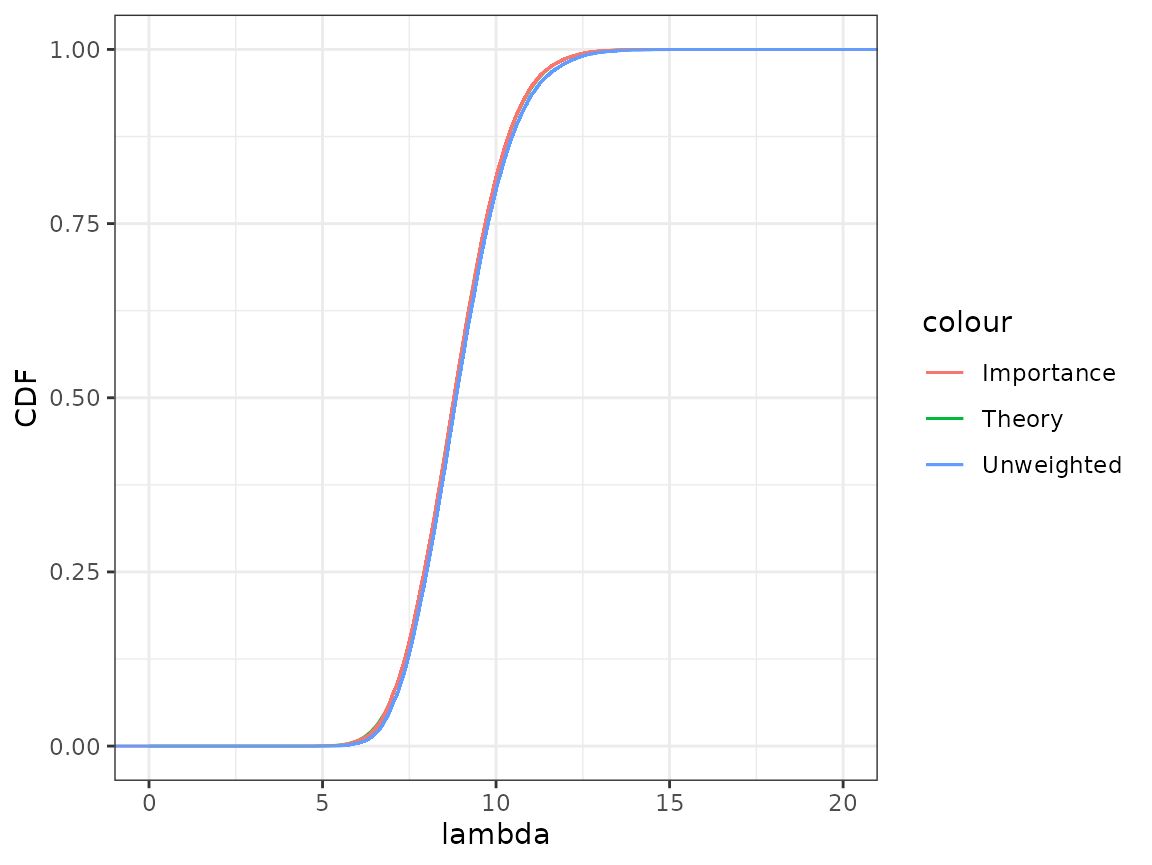

With ggplot, use geom_function to plot the

theoretical posterior cumulative distribution function for

(the CDF from the Gamma distribution given above, see

pgamma()) and compare it to the approximation given by the

importance sampling. The stat_ewcdf() function from the

StatCompLab should be used to plot the cdf for the weighted sample

,

with (unnormalised) weights

.

Also include the unweighted sample, with stat_ecwf(). How

close does the approximations come to the true posterior

distribution?

Solution:

The importance sampling version is virtually indistinguishable from the true posterior distribution. The unweighted sample is very close to the true posterior distribution; for this model, the Gaussian approximation of the posterior distribution of is an excellent approximation even before we add the importance sampling step.

ggplot(data.frame(lambda = exp(x), weights = weights)) +

xlim(0, 20) + ylab("CDF") +

geom_function(fun = pgamma, args = list(shape = 1 + sum(y), rate = a + n),

mapping = aes(col = "Theory")) +

stat_ewcdf(aes(lambda, weights = weights, col = "Importance")) +

stat_ecdf(aes(lambda, col = "Unweighted"))## Warning in geom_function(fun = pgamma, args = list(shape = 1 + sum(y), rate = a + : All aesthetics have length 1, but the data has 20000 rows.

## ℹ Please consider using `annotate()` or provide this layer with data containing

## a single row.